MAN IT'S SLOW

Performance Comparison Between My Current Desktop And An Old HP

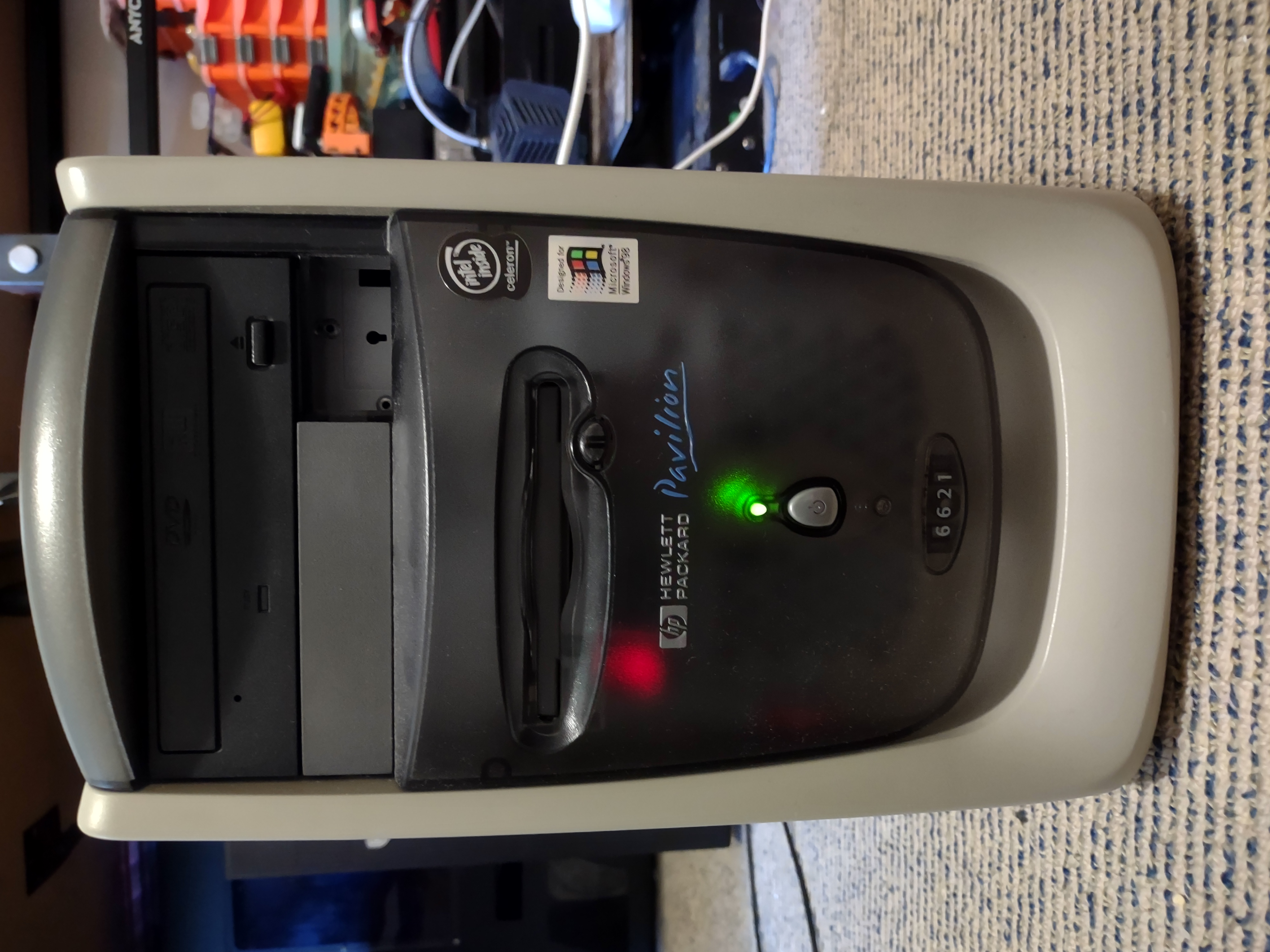

A while ago, I bought an HP Pavilion 6621 off eBay. I am not sure when the computer was released; however, if I had to guess, I would say it was some time in the late 1990s. I thought I'd see how quickly it can build the current version of a project I have been working on.

Mysterious Current Project! CLICK HERE!?

Its specifications don't look very good on paper compared to my current desktop.

| Machine | Year | CPU | CPU Speed (GHz) | No. of Cores | No. of Threads | Mem Type | Mem Capacity (GB) | Mem Speed (MT/s) | CAS Latency | Boot Drive |
|---|---|---|---|---|---|---|---|---|---|---|
| ~ Current Year Desktop | 2021 | AMD Ryzen 9 5900X | 3.7 (base), up to 4.8 | 12 | 24 | DDR4 | 32 | 3600 | 16 | Samsung SSD 980 PRO 500GB |
| HP Pavilion 6621 | ~ 1999 | Intel Celeron | 0.59820 | 1 | 1 | PC133 SDRAM | 0.375 | 133 | 3 | Samsung Lenovo 45K0639 SATA 3 SSD 128GB |

Some Info

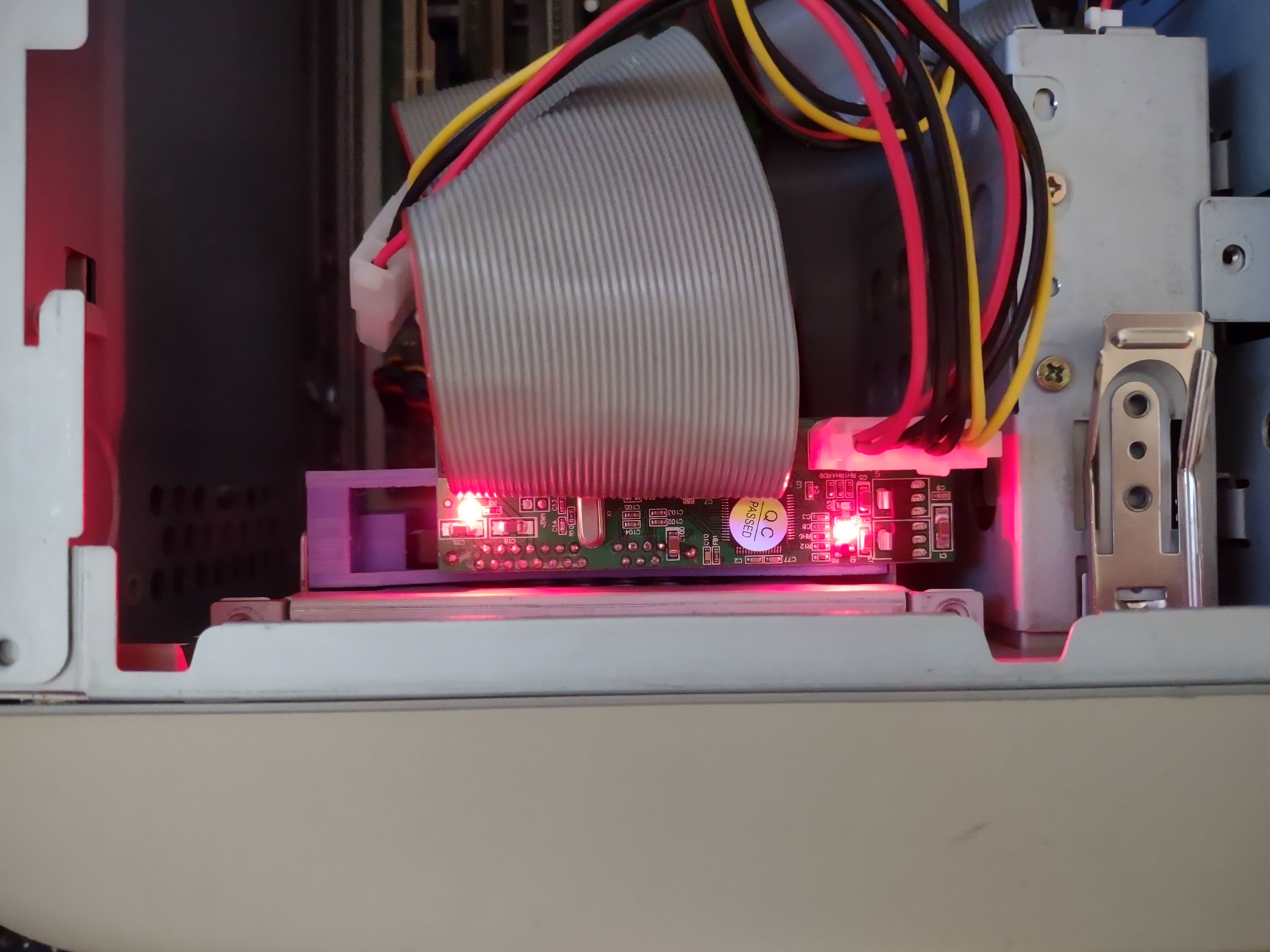

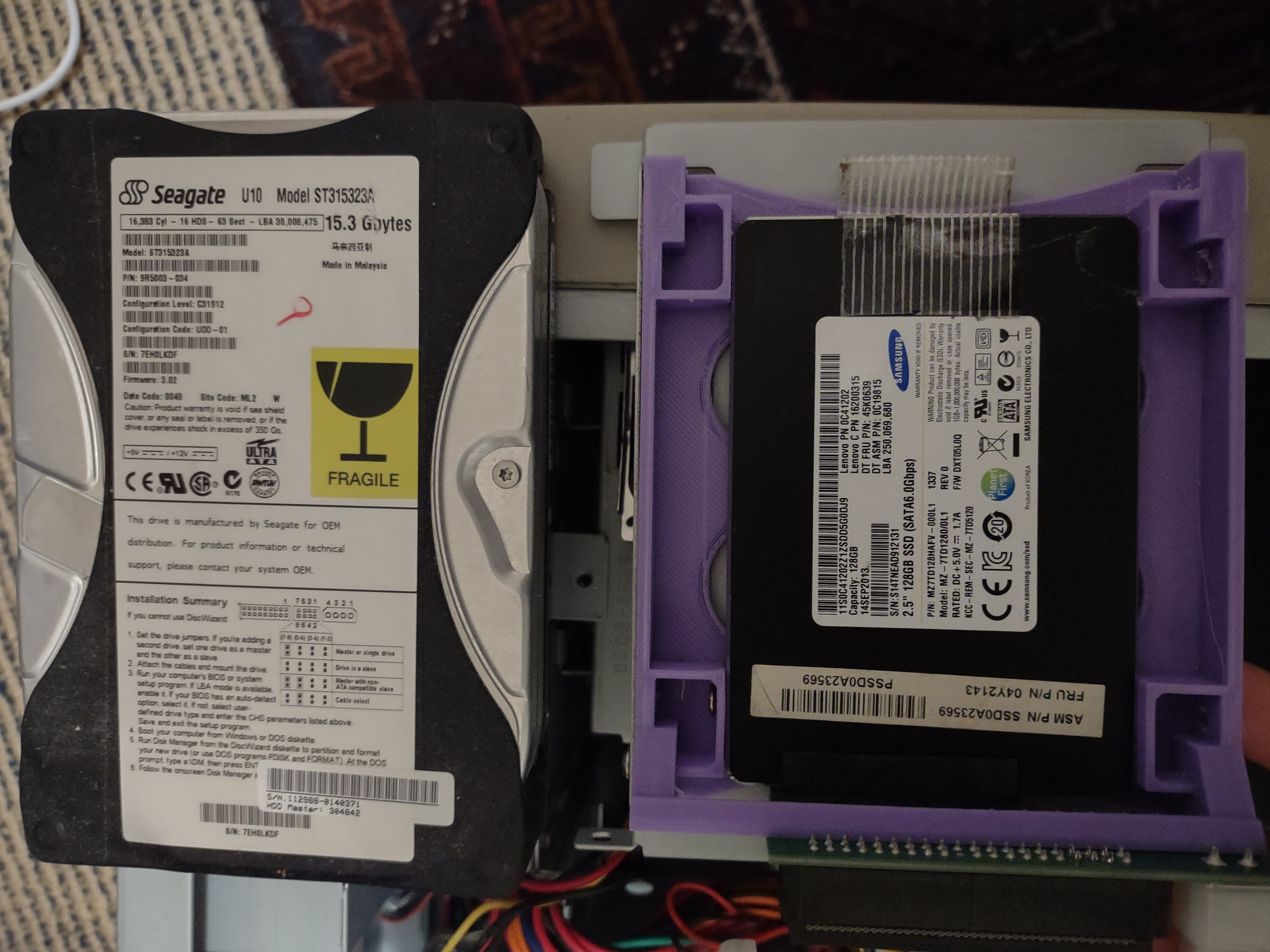

Here you can see some photos of the machine in all of its glory. It originally came with a 15GB IDE HDD, but it was hard to install any newer OSs on the machine since the BIOS wouldn't boot from DVDs or USBs (obviously), so I decided to use a SATA SSD with it and install the OS onto it using another computer. I designed a bracket to hold the SSD in the original HDD bracket. You can see it in the photos; it's the purple thing. You could argue that this will skew the benchmarks a bit; however, what I am doing is almost certainly very compute bound. The total size of the source files for my mystery project is currently 522KB, or 0.510MB, and when looking at the results it's clear that the time to read this, even on the slower machine, would be a negligible percentage of the total time. The compression algorithm is only about 1KLOC of Java. So, I don't see it as a big problem. I am just trying to get some rough numbers here anyway; to get a clearer idea of the performance characteristics of both machines and the delta between them, I would need to run a larger number of more varied benchmarks.
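To put a rough number on the "negligible percentage" claim, here is a small back-of-the-envelope sketch. The 522KB source size and the 37.582 minute Pavilion build time come from this post; the 10 MB/s read throughput is purely an assumption, picked to be pessimistic, and is not something I measured.

```python
# Rough estimate of how much of the Pavilion's build time could be source-file I/O.
SOURCE_BYTES = 522 * 1024      # 522KB of source files (from the post)
BUILD_MINUTES_HP = 37.582      # average Pavilion build time (from the results table)
ASSUMED_READ_MB_PER_S = 10.0   # ASSUMPTION: deliberately pessimistic disk throughput


def io_fraction(source_bytes, read_mb_per_s, build_minutes):
    """Fraction of the build time spent reading the sources once, sequentially."""
    read_seconds = source_bytes / (read_mb_per_s * 1024 * 1024)
    return read_seconds / (build_minutes * 60)


fraction = io_fraction(SOURCE_BYTES, ASSUMED_READ_MB_PER_S, BUILD_MINUTES_HP)
print(f"source size: {SOURCE_BYTES / (1024 * 1024):.3f} MB")
print(f"share of build time spent reading sources: {fraction:.6%}")
```

This only counts reading the project's own source files once. The compiler also reads library headers and writes object files, so the real I/O share will be higher than this figure, but there is a lot of headroom before it starts to matter.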

I installed FreeBSD 14 on the HP machine along with the libraries required to build the aforementioned project, namely SDL (including the SDL libraries for handling images, sound and fonts) and OpenCL. I also installed OpenJDK to build the compression program.

I am running FreeBSD 14 on my current desktop too, so the software environment should be the same (for the most part).

I have decided to compile the mystery project on my new machine using 1 thread as well as using all 24 threads.

It must be noted, however, that the project only has 7 .cpp files. It is my understanding that this means there will be only 7 translation units, and so I probably won't benefit from using more than 7 threads. Also, two or three of the files are thousands of lines long, with the other files being notably smaller, so the longest files will dominate the parallel build time. So, I am definitely not using my new machine to its full potential in this matchup against my old HP. But then again, I think it is, in some sense, a fair comparison: I am taking advantage of the thread-level parallelism in my newer machine, but only to a limited extent, and because of Amdahl's law and the fact that a lot of programs are only lightly threaded, that seems representative. That is to say, while my newer machine probably has a lot more peak performance than these numbers would indicate, that performance (generally) has to be explicitly taken advantage of, and most programs don't (or can't) use all of it, so I think it's fair to not use all of it in this test.
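The point about 7 unevenly sized translation units can be illustrated with a toy scheduling model. This is only a sketch: the per-file compile costs below are made-up numbers (three big files, four small ones), and the longest-first assignment is a simplification rather than how gmake actually hands out jobs.

```python
import heapq

# HYPOTHETICAL relative compile costs for 7 translation units:
# three large files and four small ones. Not measured values.
HYPOTHETICAL_COSTS = [6.0, 5.0, 4.0, 1.0, 1.0, 1.0, 1.0]


def makespan(costs, workers):
    """Wall-clock time when each job goes to the currently least-loaded worker,
    handing out the longest jobs first (a simplified scheduling model)."""
    loads = [0.0] * workers
    heapq.heapify(loads)
    for cost in sorted(costs, reverse=True):
        least_loaded = heapq.heappop(loads)
        heapq.heappush(loads, least_loaded + cost)
    return max(loads)


for n in (1, 2, 7, 24):
    t = makespan(HYPOTHETICAL_COSTS, n)
    print(f"{n:2d} workers: time {t:4.1f}, speedup {makespan(HYPOTHETICAL_COSTS, 1) / t:.2f}x")
```

In this model the time stops improving once there are as many workers as files, and even then the speedup is capped by the single largest file rather than by the number of threads available.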

I did three runs of each test and took the average of them.

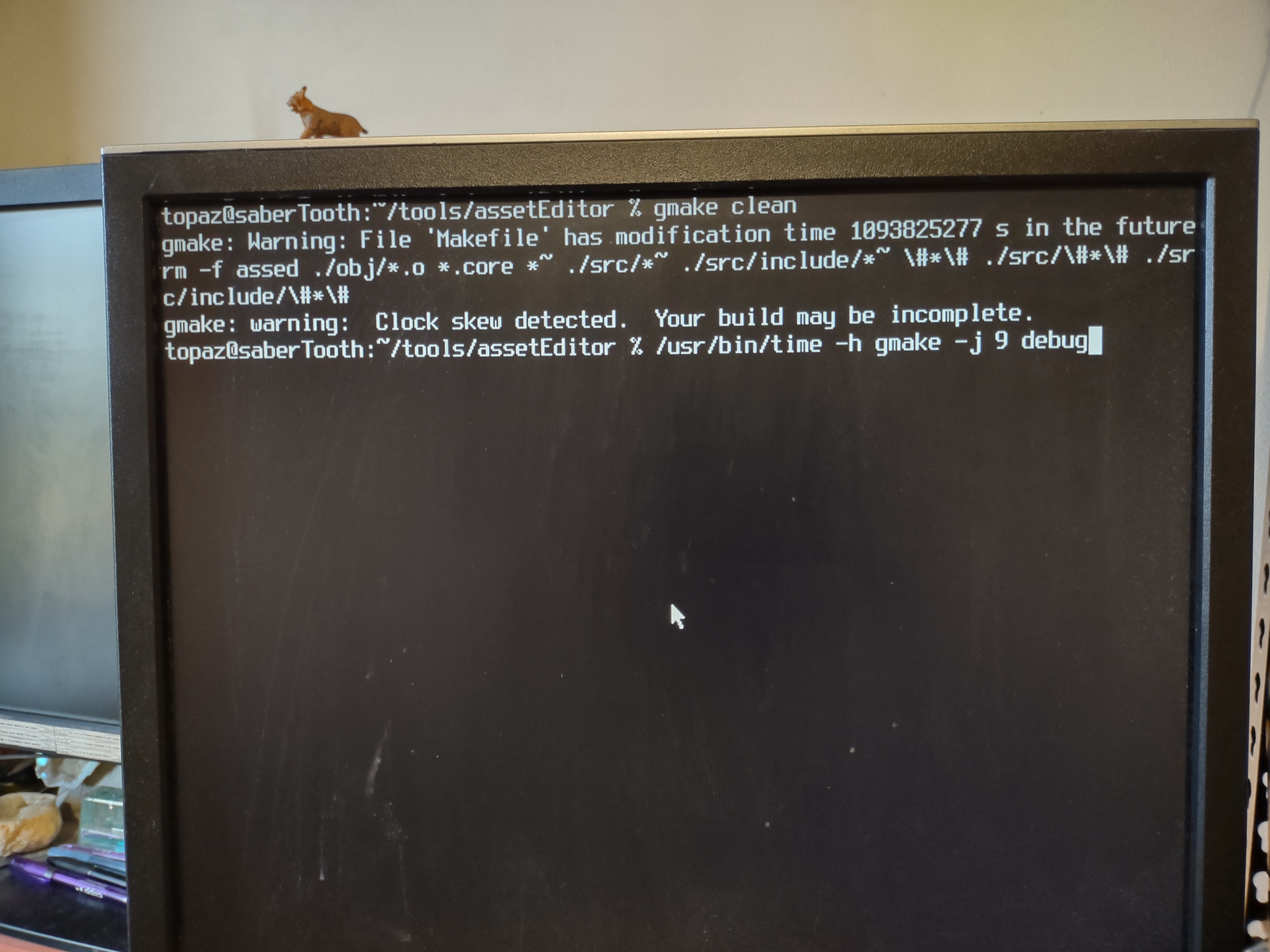

The commands used to build the programs and measure the time taken are as follows (note that RunLengthCompression does more than just RLE):

/usr/bin/time -h gmake -j 1 debug

/usr/bin/time -h gmake -j 24 debug

/usr/bin/time -h javac RunLengthCompression.java

With that said, I have compiled the results into a table, which can be seen below:

| Test | Time 1st Run (min) | Time 2nd Run (min) | Time 3rd Run (min) | Average (min) |
|---|---|---|---|---|
| ~ Current Year Desktop | | | | |
| ____Mysterious Current Project (build time 1 thread) | 00.358 | 00.352 | 00.349 | 00.353 |
| ____Mysterious Current Project (build time 24 threads) | 00.097 | 00.098 | 00.090 | 00.095 |
| ____Nascent Compression Algorithm For Mysterious Current Project | 00.005 | 00.006 | 00.006 | 00.006 |
| HP Pavilion 6621 | | | | |
| ____Mysterious Current Project (build time) | 36.620 | 36.171 | 39.956 | 37.582 |
| ____Nascent Compression Algorithm For Mysterious Current Project (build time) | 00.886 | 00.883 | 00.848 | 00.872 |
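For anyone who wants to check the Average column, a minimal sketch like the following reproduces it from the three runs. The dictionary keys are just labels I made up for this snippet; the times are copied from the table.

```python
from statistics import mean

# Run times in minutes, copied from the table. The keys are illustrative labels.
runs_min = {
    "desktop project 1 thread": [0.358, 0.352, 0.349],
    "desktop project 24 threads": [0.097, 0.098, 0.090],
    "desktop compression": [0.005, 0.006, 0.006],
    "pavilion project": [36.620, 36.171, 39.956],
    "pavilion compression": [0.886, 0.883, 0.848],
}

# Average each test over its three runs, rounded to 3 decimals like the table.
averages = {name: round(mean(times), 3) for name, times in runs_min.items()}

for name, avg in averages.items():
    print(f"{name:28s} {avg:7.3f} min")
```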

Vague Final Analysis

From the table above, it can be seen that my current year machine builds the mystery project 106.465 times faster than the venerable HP Pavilion when using a single thread (0.353 min versus 37.582 min).

Using all 24 threads at once (as mentioned above, this is somewhat deceptive), it is 395.6 times faster! If there were a lot more translation units to compile, I think this number could easily be doubled. So the measured result is that the newer machine is AT LEAST ~39,460% faster than the Pavilion at this specific task, and with more translation units I would guess that something like ~99,900% faster (about 1000x) is within reach.
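The arithmetic behind these figures is simple enough to sketch. The inputs are the averages from the table; the helper names are mine. Note that "N times faster" and "percent faster" differ by one: a 2x speedup is 100% faster, not 200%.

```python
# Average build times in minutes, taken from the results table.
PAVILION = 37.582
DESKTOP_1_THREAD = 0.353
DESKTOP_24_THREADS = 0.095


def speedup(old_minutes, new_minutes):
    """How many times faster the new time is than the old one."""
    return old_minutes / new_minutes


def percent_faster(times_faster):
    """Convert an 'N times faster' ratio into 'percent faster'."""
    return (times_faster - 1) * 100


single = speedup(PAVILION, DESKTOP_1_THREAD)
multi = speedup(PAVILION, DESKTOP_24_THREADS)
# How much the desktop itself gains from going from 1 thread to 24.
desktop_parallel_gain = speedup(DESKTOP_1_THREAD, DESKTOP_24_THREADS)

print(f"1 thread vs Pavilion:   {single:.3f}x ({percent_faster(single):,.0f}% faster)")
print(f"24 threads vs Pavilion: {multi:.1f}x ({percent_faster(multi):,.0f}% faster)")
print(f"desktop, 24 threads vs 1 thread: {desktop_parallel_gain:.2f}x")
```

The last line is worth a glance: on these numbers the desktop gets well under a 24x gain from its 24 threads, which fits with there being only 7 translation units of very uneven size.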

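When looking at the time to build the compression program, it is seen that the advantage is even greater, with a 145.333x improvement (0.006 min versus 0.872 min). The desktop's time here is only a few thousandths of a minute, so rounding has a large effect on this ratio and it should be treated as approximate. Note that this program consists of only one file, and so it was built with only one thread on my newer machine.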

Taking the lower bound of the newer machine's clock speed (3.7 GHz base) and dividing that by the Pavilion's clock speed (0.5982 GHz), I get 6.185x. Thus, purely from clock speed, I could expect roughly a 6x improvement. However, the newer machine is much wider and deeper than the old one, and the measured performance increase is much larger, so a lot of the performance is evidently coming from a myriad of architectural improvements.
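As a rough sketch of how the single-thread result splits into a clock speed part and an "everything else" part, the measured speedup can be divided by the clock ratio. The inputs come from the tables above; treating the desktop as running at its 3.7 GHz base clock is an assumption, since it may well boost higher during a build.

```python
# Clock speeds in GHz, from the specifications table.
DESKTOP_BASE_GHZ = 3.7      # ASSUMPTION: desktop runs at base clock, not boost
PAVILION_GHZ = 0.59820

# Average single-thread build times in minutes, from the results table.
PAVILION_MIN = 37.582
DESKTOP_1_THREAD_MIN = 0.353

clock_ratio = DESKTOP_BASE_GHZ / PAVILION_GHZ
measured_speedup = PAVILION_MIN / DESKTOP_1_THREAD_MIN
# Whatever is left after accounting for clock speed alone.
per_clock_gain = measured_speedup / clock_ratio

print(f"clock ratio:       {clock_ratio:.3f}x")
print(f"measured speedup:  {measured_speedup:.3f}x")
print(f"gain beyond clock: {per_clock_gain:.2f}x")
```

The leftover factor lumps together everything that is not clock speed (wider and deeper cores, caches, memory, and so on), so it is not a clean instructions-per-clock figure, and it would shrink if the desktop was actually running closer to its boost clock.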

Date: 01/11/2024